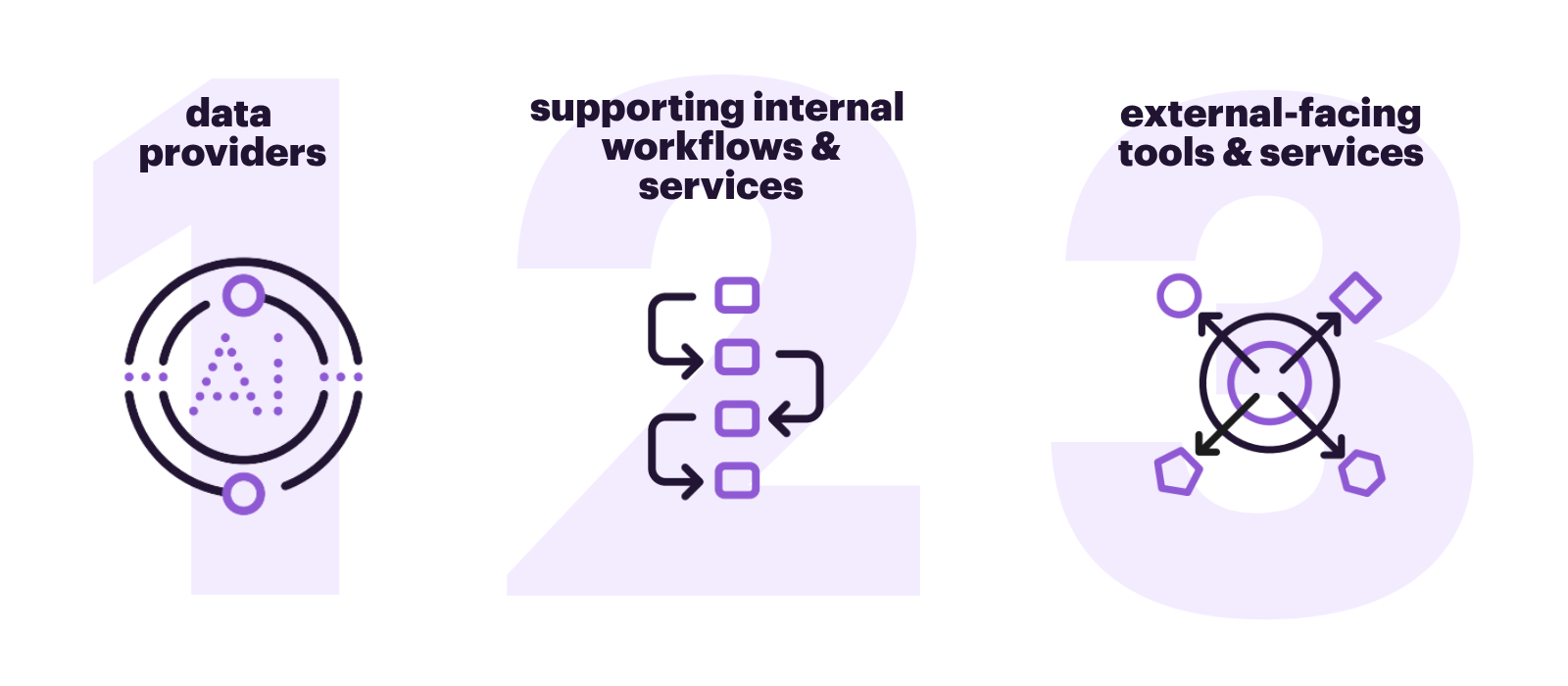

AI has become an integral part of how research is shared and improved, from editorial workflows to personalized discovery. It supports:

- Article recommendations and dynamic classification

- Enhanced search and browse functionality

- Image validation and annotation

- Authorship and editorial matching

- Content enrichment and structuring

Such integration broadens access to knowledge and streamlines the dissemination of trustworthy research (Source: AI Ethics in Scholarly Communication).

The role of AI in peer review is at the center of an active and rapidly evolving debate. Perspectives are shifting, and the following resources offer valuable insights to help navigate this critical conversation.

Our members have long pioneered the responsible use of AI, applying it to content creation, workflow optimization, and the development of innovative tools.

- Springer Nature publishes its first machine-generated book

- Springer Nature advances its machine-generated tools and offers a new book format with AI-based literature overviews

- New Plain Language Summaries of Publications Unlock the Latest Medical Research for Patients, Healthcare Professionals and Policymakers – Taylor & Francis

- OUP Oxford Law Pro

- Bringing Generative AI to the Web of Science | Clarivate

- Wiley Announces Collaboration With Amazon Web Services (AWS) to Integrate Scientific Content Into Life Sciences AI Agents | John Wiley & Sons, Inc.

- ScienceDirect AI: Eureka, every day | Elsevier

- Elsevier takes Scopus to the next level with generative AI

- Elsevier introduces Embase AI to transform how users discover, analyze and draw critical insights from biomedical research

- Elsevier introduces Reaxys AI Search, enabling faster and more accessible chemistry research through natural language discovery

To fulfill its promise, AI must be used responsibly and in alignment with the ethos of science. STM advocates for:

- Accuracy and reliability: AI should operate on the final version of record (VoR) so that the most thoroughly vetted, up-to-date research is used.

- Transparency and provenance: Systems must disclose their sources and training data and provide traceable references to maintain scholarly integrity. Many scientists are already concerned about downstream reuse of their work, fearing misrepresentation or misuse of their data for political gain.

- Human oversight: Despite AI’s capabilities, human expertise remains essential to uphold the quality, trust, and accountability of scientific publishing.

Source: AI Ethics in Scholarly Communication

See also: Recommendations for a Classification of AI Use in Academic Manuscript Preparation

Academic publishers are compiling guidelines that advise authors on the correct and transparent use of AI when preparing manuscripts for publication. Reference: Policies on artificial intelligence chatbots among academic publishers: a cross-sectional audit | Feb 2025, Springer

Despite these potential gains, AI could also harm knowledge production and dissemination in the research ecosystem if not handled carefully, and could deepen an already pervasive blurring of fact and fiction.

AI’s capabilities can amplify misinformation, especially when used without proper guardrails. The “hallucination” problem, in which AI generates false or misleading content, threatens scientific credibility and public trust.

Much like the spread of fake news through social media, scientific misinformation could erode societal trust in research and decision-making. Responsible stewardship is vital to counter these risks.

Society and policy-makers need to be able to trust scientific information to make evidence-based decisions, and researchers need that same trust to drive the innovation and discovery that are central to competitiveness and broader societal benefit.

Recognizing the dual nature of AI, STM is leading initiatives that use AI to protect research integrity. The STM Integrity Hub exemplifies this approach, offering:

- A shared, cloud-based infrastructure for detecting integrity issues

- Integration with trusted tools like Springer Nature’s AI-powered text detection system

- A human-in-the-loop model to ensure editorial discretion and accountability

This balanced approach ensures that AI supports, rather than replaces, the essential work of human reviewers and editors.

Explore the STM Integrity Hub | A unified approach to safeguard research integrity


The latest AI news from STM

In the Media | Times Higher Education — “Unseen efforts to catch paper mill outputs bear fruit”

In an article on growing threats to research integrity, Times Higher Education covers STM’s report Safeguarding Scholarly Communication: Publisher Practices to Uphold Research Integrity. The article describes how publishers are increasingly focused on identifying integrity issues before publication—responding to paper mills, AI-enabled fabrication, and coordinated fraud networks—while scaling up research integrity teams and collaborating on…

STM supports Copyright Alliance brief in key U.S. copyright case

STM has endorsed an amicus curiae brief filed by the Copyright Alliance in the ongoing U.S. appeals case Thomson Reuters v. ROSS Intelligence. The case raises important questions about copyright protection for editorial content — including material similar in nature and function to content produced by STM’s members. The case also presents a set of facts under which the lower court rightly found ROSS’s…

A recap: STM Integrity & Innovation Days 2025

On 9–10 December 2025, STM’s annual Innovation & Integrity Days brought together publishers, startups, funders, researchers and infrastructure providers for two days of focused, cross-sector collaboration in London. Now in its third year (building on the legacy of STM Week), this year’s Innovation & Integrity Days reflected a noticeable shift: more dialogue across traditional boundaries, more…

Inside STM’s November visit to Japan: key themes, takeaways & what’s next

In early November, STM CEO Caroline Sutton spent several days in Tokyo meeting with funders, government leaders, research agencies, and publishing groups — alongside delegates from STM’s Japan Chapter. As in last year’s visit, the conversations were productive, wide-ranging, and grounded in strong local partnerships. And while open science dominated the agenda in 2024, this…
